AN codes

AN codes[1] are error-correcting codes that are used in arithmetic applications. Arithmetic codes were commonly used in computer processors to ensure the accuracy of their arithmetic operations when electronics were less reliable. Arithmetic codes help the processor detect when an error is made and correct it. Without these codes, processors would be unreliable, since any errors would go undetected. AN codes are arithmetic codes that are named for the integers $A$ and $N$ that are used to encode and decode the codewords.

These codes differ from most other codes in that they use arithmetic weight to maximize the arithmetic distance between codewords, as opposed to the Hamming weight and Hamming distance. The arithmetic distance between two words is a measure of the number of errors made while computing an arithmetic operation. Using the arithmetic distance is necessary because a single error in an arithmetic operation can cause a large Hamming distance between the received answer and the correct answer. For example, a single error that adds $1$ to $0111_2 = 7$ produces $1000_2 = 8$, which differs from the correct value in all four bit positions.


Arithmetic Weight and Distance

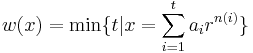

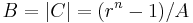

The arithmetic weight of an integer  in base

in base  is defined by

is defined by

where  <

<  ,

,  , and

, and  . The arithmetic distance of a word is upper bounded by its hamming weight since any integer can be represented by its standard polynomial form of

. The arithmetic distance of a word is upper bounded by its hamming weight since any integer can be represented by its standard polynomial form of  where the

where the  are the digits in the integer. Removing all the terms where

are the digits in the integer. Removing all the terms where  will simulate a

will simulate a  equal to its hamming weight. The arithmetic weight will usually be less than the hamming weight since the

equal to its hamming weight. The arithmetic weight will usually be less than the hamming weight since the  are allowed to be negative. For example, the integer

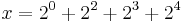

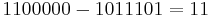

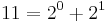

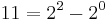

are allowed to be negative. For example, the integer  which is

which is  in binary has a hamming weight of

in binary has a hamming weight of  . This is a quick upper bound on the arithmetic weight since

. This is a quick upper bound on the arithmetic weight since  . However, since the

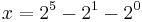

. However, since the  can be negative, we can write

can be negative, we can write  which makes the arithmetic weight equal to

which makes the arithmetic weight equal to  .

.

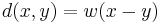

The arithmetic distance between two integers is defined by

This is one of the primary metrics used when analyzing arithmetic codes.
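For the binary case ($r = 2$), the definitions above can be sketched in Python. The sketch relies on a standard fact that this article does not state: the binary non-adjacent form (NAF), in which no two adjacent digits are nonzero, has the fewest nonzero digits among all representations with digits $-1, 0, 1$, so counting its nonzero digits gives $w(x)$. The function names are illustrative and not from any library.

```python
def naf_digits(x: int) -> list[int]:
    """Binary non-adjacent form of x, least significant digit first."""
    digits = []
    while x != 0:
        if x % 2 == 0:
            d = 0
        else:
            # Choose d = +1 or -1 so that x - d is divisible by 4;
            # this forces the next digit to be 0 (non-adjacency).
            d = 2 - (x % 4)
        digits.append(d)
        x = (x - d) // 2
    return digits


def arithmetic_weight(x: int) -> int:
    """w(x) for r = 2: the number of nonzero NAF digits."""
    return sum(1 for d in naf_digits(x) if d != 0)


def arithmetic_distance(x: int, y: int) -> int:
    """d(x, y) = w(x - y)."""
    return arithmetic_weight(x - y)


def hamming_distance(x: int, y: int) -> int:
    """Number of differing bits of two non-negative integers."""
    return bin(x ^ y).count("1")


print(arithmetic_weight(29), bin(29).count("1"))          # 3 4
print(arithmetic_distance(8, 7), hamming_distance(8, 7))  # 1 4
```

The NAF of $29$ produced here is $2^5 - 2^2 + 2^0$, a different representation from $2^5 - 2^1 - 2^0$ but with the same weight $3$.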

AN Codes

AN codes are defined by integers $A$ and $B$ and are used to encode integers $N$ in the range

$$0 \le N < B$$

as the codewords $AN$. Each choice of $A$ will result in a different code, while $B$ serves as a limiting factor to ensure useful properties in the distance of the code. If $B$ is too large, it could let a codeword with a very small arithmetic weight into the code, which will degrade the distance of the entire code. To utilize these codes, before an arithmetic operation is performed on two integers, each integer is multiplied by $A$. Let the result of the operation on the codewords be $R$. Note that the result $R/A$ must also lie between $0$ and $B - 1$ for proper decoding. To decode, simply compute $R/A$. If $A$ is not a factor of $R$, then at least one error has occurred, and the most likely solution will be the codeword with the least arithmetic distance from $R$. As with codes using Hamming distance, AN codes can correct up to $\left\lfloor \frac{d-1}{2} \right\rfloor$ errors, where $d$ is the distance of the code.
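A minimal Python sketch of encoding, error detection, and nearest-codeword decoding, assuming binary arithmetic and decoding by brute force over all $B$ codewords. This is only illustrative, not one of the decoding algorithms used in practice. The parameters $A = 19$ and $B = 10$ are a hypothetical choice for which every nonzero difference $19k$ with $0 < k < 10$ has arithmetic weight at least $3$, so a single error can be corrected.

```python
def arithmetic_weight(x: int) -> int:
    """Binary arithmetic weight: nonzero digits in the non-adjacent form."""
    weight = 0
    while x != 0:
        if x % 2:
            x -= 2 - (x % 4)  # subtract the NAF digit (+1 or -1)
            weight += 1
        x //= 2
    return weight


def encode(n: int, A: int, B: int) -> int:
    """Codeword A*n for a message 0 <= n < B."""
    if not 0 <= n < B:
        raise ValueError("message out of range")
    return A * n


def decode(R: int, A: int, B: int) -> tuple[int, bool]:
    """Return (N, error_detected) for a received result R."""
    if R % A == 0 and 0 <= R // A < B:
        return R // A, False  # R is a valid codeword
    # Otherwise pick the N whose codeword A*N is arithmetically closest to R
    # (ties go to the smallest N).
    best = min(range(B), key=lambda n: arithmetic_weight(R - A * n))
    return best, True


A, B = 19, 10                # hypothetical parameters
R = encode(5, A, B) + 4      # one error: 95 becomes 99
print(decode(R, A, B))       # (5, True)
```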

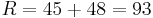

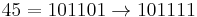

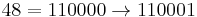

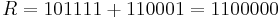

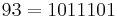

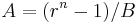

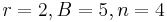

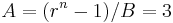

For example, in an AN code with $A = 3$, the operation of adding $15$ and $16$ will start by encoding both operands. This results in the operation $(3 \cdot 15) + (3 \cdot 16) = 45 + 48 = 93 = R$. Then, to find the solution, we compute $93/3 = 31$. As long as $B > 31$, this will be a possible operation under the code. Suppose a single-bit error occurs in the binary representation of each operand, such that $45 = 101101_2 \to 101111_2 = 47$ and $48 = 110000_2 \to 110001_2 = 49$; then $47 + 49 = 96 = 1100000_2$. Notice that since $93 = 1011101_2$, the Hamming distance between the received word and the correct solution is $5$ after just $2$ errors. To compute the arithmetic distance, we take $96 - 93 = 3$, which can be represented as $3 = 2^1 + 2^0$ or $3 = 2^2 - 2^0$. In either case, the arithmetic distance is $2$, as expected, since this is the number of errors that were made. Note that $96 = 3 \cdot 32$ is itself a codeword, so this particular double error passes the divisibility check undetected: the code with $A = 3$ has distance $w(3) = 2$, which is enough to detect a single error but not to correct one. With a code of larger distance, an algorithm would be used to correct errors by computing the nearest codeword to the received word in terms of arithmetic distance. We will not describe the algorithms in detail.
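The numbers in this example can be reproduced with a short Python sketch. It assumes, as is standard, that the binary non-adjacent form gives the arithmetic weight; the variable names are illustrative.

```python
def arithmetic_weight(x: int) -> int:
    """Binary arithmetic weight via the non-adjacent form."""
    weight = 0
    while x != 0:
        if x % 2:
            x -= 2 - (x % 4)  # subtract the NAF digit (+1 or -1)
            weight += 1
        x //= 2
    return weight


A = 3
a, b = 15, 16
R = A * a + A * b                  # 45 + 48 = 93
assert R // A == a + b             # decodes to 31

a_bad, b_bad = 0b101111, 0b110001  # 47 and 49: one bit flipped in each operand
R_bad = a_bad + b_bad              # 96

print(bin(R_bad ^ R).count("1"))     # Hamming distance: 5
print(arithmetic_weight(R_bad - R))  # arithmetic distance: 2
print(R_bad % A == 0)                # True: 96 = 3 * 32 passes the check
```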

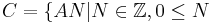

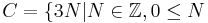

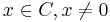

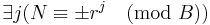

To ensure that the distance of the code will not be too small, we will define modular AN codes. A modular AN code  is a subgroup of

is a subgroup of  , where

, where  . The codes are measured in terms of modular distance which is defined in terms of a graph with vertices being the elements of

. The codes are measured in terms of modular distance which is defined in terms of a graph with vertices being the elements of  . Two vertices

. Two vertices  and

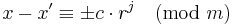

and  are connected iff

are connected iff

where  and

and  <

< <

< ,

,  . Then the modular distance between two words is the length of the shortest path between their nodes in the graph. The modular weight of a word is its distance from

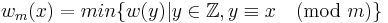

. Then the modular distance between two words is the length of the shortest path between their nodes in the graph. The modular weight of a word is its distance from  which is equal to

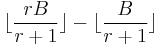

which is equal to

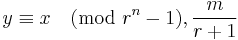
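The graph definition translates directly into a breadth-first search from $0$. The Python sketch below assumes a small modulus $m$, since it visits every residue. It generates the edge steps $a r^j \bmod m$ by iterating $j$ until the powers of $r$ modulo $m$ start repeating. The function names are hypothetical.

```python
from collections import deque


def step_values(r: int, m: int) -> set[int]:
    """Residues of a * r**j (mod m) with 0 < |a| < r and j >= 0."""
    steps = set()
    seen_powers = set()
    p = 1 % m
    while p not in seen_powers:  # powers of r mod m are eventually periodic
        seen_powers.add(p)
        for a in range(1, r):
            steps.add(a * p % m)
            steps.add(-a * p % m)
        p = p * r % m
    steps.discard(0)
    return steps


def modular_weights(r: int, m: int) -> list[int]:
    """weights[x] = w_m(x): shortest-path distance from 0 to x in the graph."""
    steps = step_values(r, m)
    weights = [-1] * m
    weights[0] = 0
    queue = deque([0])
    while queue:
        x = queue.popleft()
        for s in steps:
            y = (x + s) % m
            if weights[y] == -1:
                weights[y] = weights[x] + 1
                queue.append(y)
    return weights


w = modular_weights(2, 15)
print(w[7], w[3])  # 1 2
```

For example, $7 = 2^3 - 2^0$ has arithmetic weight $2$ as an integer, but $7 \equiv -2^3 \pmod{15}$, so its modular weight is $1$.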

In practice, the value of $m$ is typically chosen such that $m = 2^n - 1$, since computer arithmetic on $n$-bit words wraps around modulo a fixed modulus (for ones'-complement arithmetic, exactly $2^n - 1$). There is then no additional loss of data due to the code going out of bounds, since the computer's result would also be out of bounds. Choosing $m = 2^n - 1$ also tends to result in codes with larger distances than other choices of $m$.

By using modular weight with $m = r^n - 1$, the AN codes will be cyclic codes.

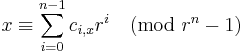

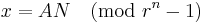

definition: A cyclic AN code is a code $C$ that is a subgroup of $[r^n - 1]$, where $[r^n - 1]$ denotes the ring $\mathbb{Z}/(r^n - 1)\mathbb{Z}$, whose elements are represented by $\{0, 1, 2, \dots, r^n - 2\}$.

A cyclic AN code is a principal ideal of the ring $[r^n - 1]$. There are integers $A$ and $B$ with $AB = r^n - 1$ such that $C = \{AN \mid 0 \le N < B\}$, so $A$ and $B$ satisfy the definition of an AN code. Cyclic AN codes are a subset of cyclic codes and have the same properties.
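Why these codes are called cyclic can be illustrated with a short Python sketch, assuming only that $r^n \equiv 1 \pmod{r^n - 1}$. Under that congruence, multiplying by $r$ in $[r^n - 1]$ rotates the $n$ base-$r$ digits of a number by one place, so the set of all multiples of a divisor $A$ of $r^n - 1$ is closed under this rotation. The helper names are hypothetical.

```python
def base_r_digits(x: int, r: int, n: int) -> list[int]:
    """The n base-r digits of 0 <= x < r**n, least significant first."""
    return [(x // r**i) % r for i in range(n)]


def cyclic_an_code(A: int, r: int, n: int) -> list[int]:
    """All codewords A*N with 0 <= N < B, where A * B = r**n - 1."""
    m = r**n - 1
    if m % A != 0:
        raise ValueError("A must divide r**n - 1")
    return [A * N for N in range(m // A)]


def times_r(x: int, r: int, n: int) -> int:
    """Multiply by r in the ring Z/(r**n - 1)Z."""
    return x * r % (r**n - 1)


r, n = 2, 4
for x in range(r**n - 1):
    d = base_r_digits(x, r, n)
    # Multiplying by r moves every digit up one place and wraps the top
    # digit around to position 0, because r**n = 1 modulo r**n - 1.
    assert base_r_digits(times_r(x, r, n), r, n) == [d[-1]] + d[:-1]

code = cyclic_an_code(3, r, n)                          # [0, 3, 6, 9, 12]
print(sorted(times_r(x, r, n) for x in code) == code)  # True: closed under shifts
```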

Mandelbaum-Barrows Codes

The Mandelbaum-Barrows codes are a type of cyclic AN code introduced by D. Mandelbaum and J. T. Barrows[2][3]. These codes are created by choosing $B$ to be a prime number that does not divide $r$, such that the multiplicative group $(\mathbb{Z}/B\mathbb{Z})^*$ is generated by $r$ and $-1$. Let $n$ be a positive integer where $r^n \equiv 1 \pmod{B}$, and let $m = r^n - 1$ and $A = m/B$. For example, choosing $r = 2$, $B = 5$, and $n = 4$ gives $A = (2^4 - 1)/5 = 3$, and the result is the Mandelbaum-Barrows code $C = \{3N \mid 0 \le N < 5\} = \{0, 3, 6, 9, 12\}$ in base $2$.
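The construction can be sketched in Python. The checks mirror the conditions above. When no $n$ is given, the sketch picks the smallest $n$ with $r^n \equiv 1 \pmod{B}$. That default is a hypothetical convenience, since any such $n$ satisfies the definition. Primality is tested by trial division, which is adequate only for small $B$.

```python
import math
from typing import Optional


def is_prime(b: int) -> bool:
    """Trial-division primality test (adequate for small B)."""
    return b >= 2 and all(b % d for d in range(2, math.isqrt(b) + 1))


def generated_by_r_and_minus_one(r: int, B: int) -> bool:
    """True if r and -1 together generate the multiplicative group (Z/BZ)^*."""
    if B < 2 or math.gcd(r, B) != 1:
        return False
    subgroup = set()
    p = 1
    while True:
        subgroup.update({p, (-p) % B})  # r**j and -r**j modulo B
        p = p * r % B
        if p == 1:
            break
    return len(subgroup) == B - 1


def multiplicative_order(r: int, B: int) -> int:
    """Smallest n >= 1 with r**n = 1 (mod B); assumes gcd(r, B) = 1, B >= 2."""
    n, p = 1, r % B
    while p != 1:
        p = p * r % B
        n += 1
    return n


def mandelbaum_barrows(r: int, B: int, n: Optional[int] = None):
    """Return (A, n, codewords) for a Mandelbaum-Barrows code."""
    if not is_prime(B) or r % B == 0:
        raise ValueError("B must be a prime that does not divide r")
    if not generated_by_r_and_minus_one(r, B):
        raise ValueError("r and -1 must generate (Z/BZ)^*")
    if n is None:
        # Hypothetical default: the smallest n with r**n = 1 (mod B).
        n = multiplicative_order(r, B)
    elif pow(r, n, B) != 1:
        raise ValueError("n must satisfy r**n = 1 (mod B)")
    A = (r**n - 1) // B
    return A, n, [A * N for N in range(B)]


print(mandelbaum_barrows(2, 5))  # (3, 4, [0, 3, 6, 9, 12])
```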

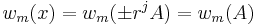

To analyze the distance of the Mandelbaum-Barrows Codes, we will need the following theorem.

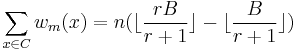

theorem: Let ![C \subset [r^n - 1]](/2012-wikipedia_en_all_nopic_01_2012/I/0019b5033bb9412c19b9ba89d70f0896.png) be a cyclic AN code with generator

be a cyclic AN code with generator  , and

, and

Then,

proof: Assume that each  has a unique cyclic NAF[4] representation which is

has a unique cyclic NAF[4] representation which is

We define an  matrix with elements

matrix with elements  where

where  and

and  . This matrix a essentially a list of all the codewords in

. This matrix a essentially a list of all the codewords in  where each column is a codeword. Since

where each column is a codeword. Since  is cyclic, each column of the matrix has the same number of zeros. We must now calculate

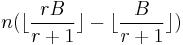

is cyclic, each column of the matrix has the same number of zeros. We must now calculate  , which is

, which is  times the number of codewords that don't end with a

times the number of codewords that don't end with a  . As a property of being in cyclic NAF,

. As a property of being in cyclic NAF,  iff there is a

iff there is a  with

with  <

< . Since

. Since  with

with  <

< , then

, then  <

< . Then the number of integers that have a zero as their last bit are

. Then the number of integers that have a zero as their last bit are  . Multiplying this by the

. Multiplying this by the  characters in the codewords gives us a sum of the weights of the codewords of

characters in the codewords gives us a sum of the weights of the codewords of  as desired.

as desired.
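The theorem can be checked numerically for small binary codes. The Python sketch below computes modular weights by breadth-first search over the graph defined earlier, with $m = r^n - 1$, and compares the total weight of each code with the formula. The $(A, n)$ pairs are arbitrary small examples, and agreement on them is a sanity check, not a proof.

```python
from collections import deque


def modular_weights(r: int, n: int) -> list[int]:
    """w_m(x) for every x modulo m = r**n - 1, by breadth-first search from 0.

    Edges join x and x + a*r**j (mod m) for 0 < |a| < r and 0 <= j < n;
    larger j add nothing new because r**n = 1 (mod m).
    """
    m = r**n - 1
    steps = {s * a * r**j % m for j in range(n) for a in range(1, r) for s in (1, -1)}
    steps.discard(0)
    weights = [-1] * m
    weights[0] = 0
    queue = deque([0])
    while queue:
        x = queue.popleft()
        for step in steps:
            y = (x + step) % m
            if weights[y] == -1:
                weights[y] = weights[x] + 1
                queue.append(y)
    return weights


def total_weight(A: int, r: int, n: int) -> int:
    """Sum of w_m(x) over the cyclic AN code generated by A."""
    m = r**n - 1
    w = modular_weights(r, n)
    return sum(w[A * N] for N in range(m // A))


def theorem_value(A: int, r: int, n: int) -> int:
    """n * (floor(rB/(r+1)) - floor(B/(r+1))) with B = (r**n - 1) / A."""
    B = (r**n - 1) // A
    return n * (r * B // (r + 1) - B // (r + 1))


for A, n in [(3, 4), (5, 4), (1, 4), (9, 6), (1, 3)]:
    # Each line prints A, n, the computed total and the formula's value.
    print(A, n, total_weight(A, 2, n), theorem_value(A, 2, n))
```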

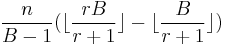

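We will now use the previous theorem to show that the Mandelbaum-Barrows codes are equidistant (which means that every pair of distinct codewords has the same distance), with a distance of

$$d = \frac{n}{B - 1}\left(\left\lfloor \frac{rB}{r+1} \right\rfloor - \left\lfloor \frac{B}{r+1} \right\rfloor\right).$$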

proof: Let $x \in C$ with $x \ne 0$; then $x = AN$ and $N$ is not divisible by $B$. Since $(\mathbb{Z}/B\mathbb{Z})^*$ is generated by $r$ and $-1$, there is a $j$ with $N \equiv \pm r^j \pmod{B}$. Then $x \equiv \pm r^j A \pmod{r^n - 1}$, and since multiplying by $r$ cyclically shifts the cyclic NAF while negation flips the sign of each digit, $w_m(x) = w_m(A)$. This proves that $C$ is equidistant: all nonzero codewords have the same weight as $A$, and the distance between two distinct codewords is the weight of their difference, which is again a nonzero codeword. Since all nonzero codewords have the same weight, and by the previous theorem we know the total weight of all codewords, the distance of the code is found by dividing the total weight by the number of nonzero codewords, $B - 1$.
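A Python sketch that checks the equidistance claim on small binary Mandelbaum-Barrows codes by computing every pairwise modular distance $d(x, y) = w_m(x - y)$ directly. The parameter triples $(r, B, n)$ are illustrative choices that satisfy the conditions in the definition. The expected distance is kept as an exact fraction.

```python
from collections import deque
from fractions import Fraction


def modular_weights(r: int, n: int) -> list[int]:
    """w_m(x) for every residue x modulo r**n - 1 (breadth-first search from 0)."""
    m = r**n - 1
    steps = {s * a * r**j % m for j in range(n) for a in range(1, r) for s in (1, -1)}
    steps.discard(0)
    weights = [-1] * m
    weights[0] = 0
    queue = deque([0])
    while queue:
        x = queue.popleft()
        for step in steps:
            y = (x + step) % m
            if weights[y] == -1:
                weights[y] = weights[x] + 1
                queue.append(y)
    return weights


def pairwise_distances(r: int, B: int, n: int) -> set[int]:
    """Set of modular distances w_m(x - y) between distinct codewords."""
    m = r**n - 1
    A = m // B
    w = modular_weights(r, n)
    code = [A * N for N in range(B)]
    return {w[(x - y) % m] for x in code for y in code if x != y}


def predicted_distance(r: int, B: int, n: int) -> Fraction:
    """The distance formula n(floor(rB/(r+1)) - floor(B/(r+1))) / (B - 1)."""
    return Fraction(n * (r * B // (r + 1) - B // (r + 1)), B - 1)


for r, B, n in [(2, 5, 4), (2, 11, 10), (2, 3, 2)]:
    # A single-element set means the code is equidistant.
    print(pairwise_distances(r, B, n), predicted_distance(r, B, n))
```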


References

- Peterson, W. W. and Weldon, E. J.: Error-correcting Codes. Cambridge, Mass.: MIT Press, 1972.

- Massey, J. L. and Garcia, O. N.: Error-correcting codes in computer arithmetic. In: Advances in Information Systems Science, Vol. 4, Ch. 5 (edited by J. T. Tou). New York: Plenum Press, 1972.

- Van Lint, J. H.: Introduction to Coding Theory. Graduate Texts in Mathematics 86. New York: Springer-Verlag, 1982.

- Clark, W. E. and Liang, J. J.: On modular weight and cyclic nonadjacent forms for arithmetic codes. IEEE Trans. Info. Theory, 20, pp. 767–770, 1974.